Designing AI accelerator cards with currents over 1000A

The latest AI accelerator cards for the data centre, such as Nvidia’s A100 card and Open Compute Project (OCP) designs, are rapidly moving to 48V and over 1000A of current, which challenges power management for both the chip and the board designs. A new power architecture and both lateral and vertical packages are needed to power the boards.

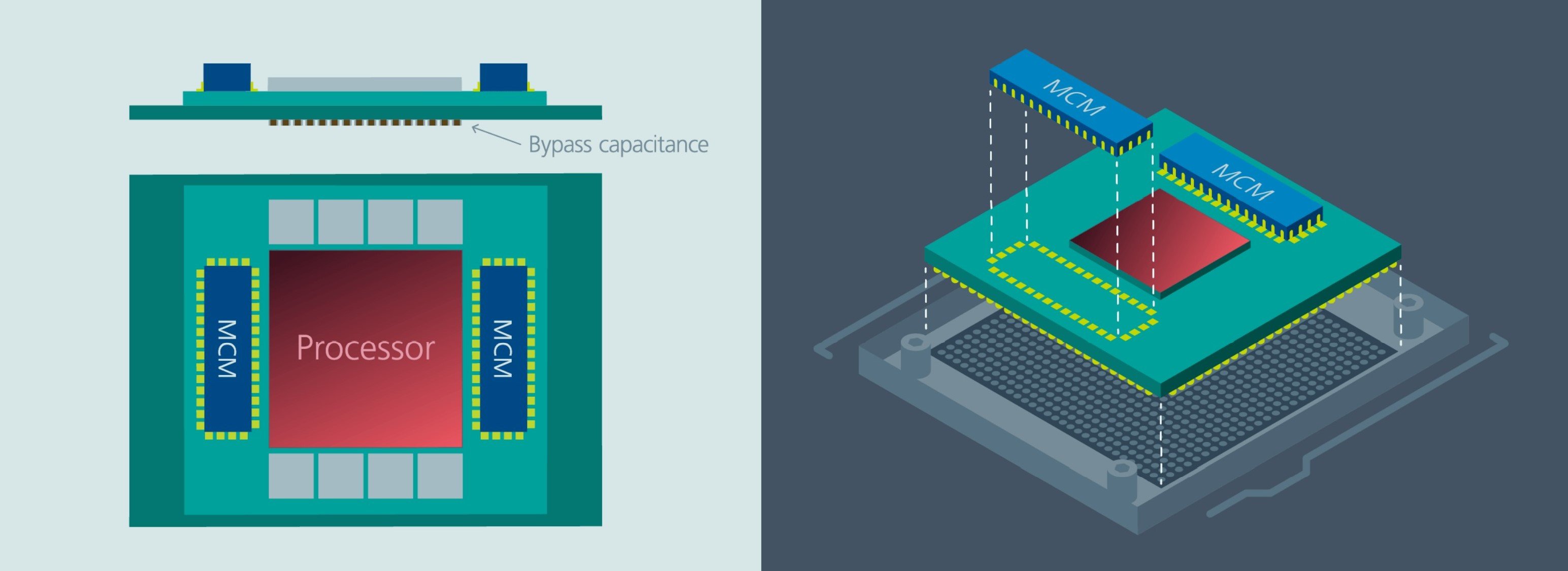

“We support an accelerator card that’s very similar to the Nvidia card,” said Robert Gendron, Corporate Vice President for Product Marketing & Technical Resources at Vicor. “We support delivery for the AI processor itself – if you look at the card, there are three chips, with one supporting the primary rail. There’s two other devices for the memory and the other rails of the processor,” he said.

The high current requirements mean existing multiphase point-of-load converters powering the primary rail have run out of steam, he says.

“Customers use us for these AI accelerator cards and the OCP cards, so we designed the chipset specifically to fit an AI accelerator card where the power required exceeds what a multiphase converter could accommodate,” he said. “It would consume too much area and incur too much loss transmitting the current from the voltage rail to the processor, and the noise contribution would be challenging for a conventional multiphase converter.

“We use a different architecture to support 400A and above, and a different packaging style to create the high density module. This Factorised Power Architecture does not use a series of inductors to supply current to the processor. Our solution has almost no energy stored within the device so it’s much smaller and provides better noise and transient performance. We do use a magnetic structure, a magnetic core, that provides voltage division and current multiplication.”


“This is more like an ideal transformer where there is no energy stored, versus an inductor which acts as an averaging device, so it’s harder for the inductor to respond,” said Gendron.
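The "voltage division and current multiplication" Gendron describes can be illustrated with a lossless ideal-transformer model. This is only a sketch: the function name and the 1/48 ratio are hypothetical, chosen for illustration, and are not figures from Vicor or from this article.

```python
def ideal_current_multiplier(v_in, i_out, k):
    """Model a lossless fixed-ratio stage with ratio k = v_out / v_in.

    Dividing the voltage by 1/k multiplies the current by 1/k, so
    input power equals output power (no stored energy, no loss).
    """
    v_out = v_in * k   # voltage division
    i_in = i_out * k   # the input carries only a fraction of the load current
    return v_out, i_in


# Hypothetical numbers: 48V input, 1000A load, assumed ratio of 1/48.
v_out, i_in = ideal_current_multiplier(v_in=48.0, i_out=1000.0, k=1 / 48)
print(f"output: {v_out:.2f} V, input current: {i_in:.1f} A")
```

In this idealised model the high current exists only on the output side of the stage, which is why placing that stage close to the processor shortens the high-current path.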

“We have integrated our magnetics along with the silicon into the package so it’s a higher level of integration,” he said. “It’s a true 3D power package with an FR4-type substrate, components on both sides and overmolded, with a plating process – there is our own controller that’s a mix of analog and digital control. Part of the higher density we have comes from the high switching frequency, typically over 1MHz compared to 300kHz. The loop itself is set up with a speed of a conventional processor of 200 to 300kHz.”

Board makers are running to keep up as currents move over 1000A.

“The growth of AI processing in the cloud with accelerator cards or racks means the power consumption has quickly gone from 10 to 15kW to 40kW or more,” said Gendron. “No one really saw this dramatic increase in power consumption of the board and the rack itself coming, or the speed at which AI is being adopted in the cloud, so the conventional multiphase designs were outpaced. There was no way they could keep up.”

This means mounting the power devices even closer to the AI chips.

“Right now we are working with several customers on designs with 1000A of continuous current – with those solutions we are using the same architecture but reintegrating to vertical power delivery to put the MCM underneath the processor to further minimise the losses,” he said.

“The FPA resolved a lot of the losses from a multiphase topology but even that runs into a wall at 1000A, so we have a power delivery topology that will further take away the losses and not waste it through the PCB,” said Ajith Jain, strategic accounts manager for data centre and high performance computing at Vicor who works closely with the board designers.

“The efficiency of the chipset is definitely important but what is even more important is the losses in the board, the power delivery network (PDN) losses,” said Gendron. “A server board has 400µΩ, and if you are delivering 500A that means you are burning 100W in the board, so it’s a maximum efficiency of 75 percent. On these accelerator cards we reduced that to 50µΩ, so we can show 96.8% with 12W of loss. With vertical power delivery with 1000A we have designs with 5µΩ of loss on the board – with 500A that’s 99.7% overall – this is why the PDN losses are so important as they dominate the losses.”
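The board-loss figures quoted above follow from the I²R relationship, and a short sketch can reproduce them. The 0.75V load voltage is an assumption made here to turn losses into efficiencies; the article does not state the rail voltage, so the computed percentages are only indicative and need not match the rounded quoted figures exactly.

```python
def pdn_loss_watts(current_a, resistance_ohm):
    """Resistive loss in the power delivery network: P = I^2 * R."""
    return current_a ** 2 * resistance_ohm


def delivery_efficiency(load_w, loss_w):
    """Fraction of the power entering the PDN that reaches the load."""
    return load_w / (load_w + loss_w)


CURRENT_A = 500.0
ASSUMED_LOAD_V = 0.75  # assumption: not stated in the article
load_w = CURRENT_A * ASSUMED_LOAD_V

# PDN resistances quoted in the article, in ohms.
pdn_cases = {
    "server board (400 micro-ohm)": 400e-6,
    "accelerator card (50 micro-ohm)": 50e-6,
    "vertical power delivery (5 micro-ohm)": 5e-6,
}

for label, resistance in pdn_cases.items():
    loss = pdn_loss_watts(CURRENT_A, resistance)
    eff = delivery_efficiency(load_w, loss)
    print(f"{label}: {loss:.2f} W lost, {eff:.1%} delivered")
```

Because the loss scales with the square of the current, doubling the current to 1000A through the same resistance would quadruple the loss, which is why the PDN resistance has to fall as currents rise.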

This also fits into existing board designs.

“There’s nothing unique that needs to change in the board design,” he said. “You already have a large bank of bypass capacitance there with vias, so power is already routed down there and back up again.”

There is also a system advantage that drives more memory in racks to boost the AI performance.

“If you look at the ATX power supply that puts out 12V they were using PCIe and a flying cable to deliver power over and above the power from PCIe,” said Jain. “48V allows one connection and is able to put the power conversion at the point of load and this allows more memory that more than quadruples the AI performance – this has given them a massive leg up at the rack level, so it’s not just the power efficiency,” he said.
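The distribution advantage of 48V over 12V can be sketched with the same I²R reasoning: for a fixed power, a four times higher bus voltage means one quarter of the current and one sixteenth of the conduction loss in the same path. The 500W card power and 5mΩ path resistance below are hypothetical values for illustration, not figures from the article.

```python
def bus_current_a(power_w, bus_v):
    """Current drawn from the bus for a given power: I = P / V."""
    return power_w / bus_v


def conduction_loss_w(power_w, bus_v, path_resistance_ohm):
    """I^2 * R loss in the cable, connector and board path feeding the card."""
    return bus_current_a(power_w, bus_v) ** 2 * path_resistance_ohm


CARD_POWER_W = 500.0         # hypothetical card power
PATH_RESISTANCE_OHM = 0.005  # hypothetical connector and cable resistance

for bus_v in (12.0, 48.0):
    current = bus_current_a(CARD_POWER_W, bus_v)
    loss = conduction_loss_w(CARD_POWER_W, bus_v, PATH_RESISTANCE_OHM)
    print(f"{bus_v:.0f} V bus: {current:.1f} A, {loss:.2f} W lost in the path")
```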

